Use Google Fonts for Machine Learning (Part 2: Filter & Generate)

Quickly generate hundreds of font images.

Let’s pick up where we left off in the last post. We cleaned up the JSON annotations and exported them as a CSV file. Now, let’s load the CSV file back in, filter the fonts with conditions we define, and generate PNG images that we can use to train Convolutional Neural Networks.

Directory Setup

Again, I am treating this post as a Jupyter Notebook, so if you want to follow along, open Jupyter Notebook in your own environment. You will also need to download the Google Fonts GitHub repo and generate the CSV annotations. We went over all of this in the last post, so please check it out first.

As you can see from the tree diagram below, the Google Fonts GitHub repo is downloaded into the input directory. All the font files are stored in the ofl subdirectory, which is how the repo is originally set up; I have not made any changes to it. I have also set up a notebook directory containing the .ipynb notebook (this post) and the CSV annotation file.

.
├── input
│   ├── fonts
│   │   ...
│   │   ├── ofl
│   │   ...
│   ├── fonts-master.json
└── notebook
    └── google-fonts-ml
        ├── google-fonts-ml.ipynb
        └── google-fonts-annotaion.csv

Load CSV Annotations

First, we will load the CSV annotations as a pandas.DataFrame.

| # modules we need this time | |

| import matplotlib.pyplot as plt | |

| from PIL import Image,ImageDraw,ImageFont | |

| import pandas as pd | |

| import numpy as np | |

| # load the CSV we created in the last post | |

| df = pd.read_csv('./google-fonts-annotaion.csv') |

Look at Data Summary

Let’s have a look at what we are dealing with here: how many fonts are in each category, how many fonts have which variants, and so on. We first use a regular expression to select the columns we want to look at. Then we count the rows with a value of 1 in each of those columns and sort the counts by frequency.

| print('Number of fonts in each variant:') | |

| print(df[df.filter(regex=r'^variants_', axis=1).columns].eq(1).sum().sort_values(ascending=False)) | |

| print('\nNumber of fonts in each subset:') | |

| print(df[df.filter(regex=r'^subsets_', axis=1).columns].eq(1).sum().sort_values(ascending=False)) | |

| print('\nNumber of fonts in each category:') | |

| print(df[df.filter(regex=r'^category_', axis=1).columns].eq(1).sum().sort_values(ascending=False)) |

At the time of writing, for instance, there are 325 display, 311 sans-serif, and 204 serif font families. The number of fonts is not the same as the number of font files, however, because the CSV annotation is only counting the font families and there are other factors such as variable fonts, etc.
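To make the counting step easier to follow, here is a minimal sketch on a toy DataFrame. The family names and the exact column names are made up for illustration; only the `category_` and `variants_` prefixes follow the annotation layout used in this post, and `count_flags` is a hypothetical helper, not part of pandas.

```python
import pandas as pd

# Toy stand-in for the real annotation CSV (hypothetical families and columns)
toy = pd.DataFrame({
    'family': ['Alpha', 'Beta', 'Gamma'],
    'category_serif': [1, 0, 0],
    'category_sans-serif': [0, 1, 1],
    'variants_regular': [1, 1, 1],
    'variants_bold': [1, 0, 1],
})

def count_flags(frame, prefix):
    """Count the rows set to 1 in every column whose name starts with prefix."""
    cols = frame.filter(regex=f'^{prefix}', axis=1)
    return cols.eq(1).sum().sort_values(ascending=False)

category_counts = count_flags(toy, 'category_')
variant_counts = count_flags(toy, 'variants_')
print(category_counts)
print(variant_counts)
```

Selecting the columns once with `filter` and counting directly on the result gives the same numbers as the longer expression above, without indexing `df` a second time.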

Filtering Fonts

Now, let’s create conditions to select sub-groups of fonts. Because we took the time to create a nice CSV annotation, it is easy to filter fonts according to our own criteria. Say, how many fonts come in both thin and regular weights and also support Thai?

| mask = (df.filter(regex='thin', axis=1).sum(axis=1).astype(bool) & | |

| df.filter(regex='regular', axis=1).sum(axis=1).astype(bool) & | |

| df.filter(regex='thai', axis=1).sum(axis=1).astype(bool)) | |

| df_selected = df[mask] | |

| df_selected |

The reason we call sum(axis=1) after filtering is to capture multiple columns that match the same condition. For example, if we filter with italic, several columns will be included, such as bolditalic, mediumitalic, etc. Summing across them and casting to bool tells us whether a font has at least one of them. We can combine multiple conditions into a boolean Series object called mask and pass it to our DataFrame object, which then returns only the rows that meet the conditions. We use & to select only the fonts that meet all the conditions.
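Here is a small sketch of that idea on toy data, so you can see which columns a loose pattern picks up. The families and column names are hypothetical stand-ins for the real annotation.

```python
import pandas as pd

# Toy annotation with two italic-like columns (hypothetical data)
toy = pd.DataFrame({
    'family': ['Alpha', 'Beta', 'Gamma'],
    'variants_italic': [0, 0, 1],
    'variants_bolditalic': [1, 0, 0],
    'variants_regular': [1, 1, 1],
    'subsets_thai': [1, 0, 1],
})

# 'italic' matches both variants_italic and variants_bolditalic
italic_cols = toy.filter(regex='italic', axis=1)

# a font counts as italic if any of the matched columns is set
has_italic = italic_cols.sum(axis=1).astype(bool)
has_thai = toy.filter(regex='thai', axis=1).sum(axis=1).astype(bool)

# & keeps only the rows where every condition holds
mask = has_italic & has_thai
selected = list(toy[mask].family)
print(selected)
```

Alpha is selected through its bolditalic column even though its plain italic column is 0, which is exactly the case the row-wise sum is meant to catch.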

Here is another one. Let’s find the sans-serif fonts that support Chinese.

| mask = (df.filter(regex='chinese', axis=1).sum(axis=1).astype(bool) & | |

| df.filter(regex='sans-serif', axis=1).sum(axis=1).astype(bool)) | |

| df_selected = df[mask] | |

| df_selected |

I get 3 fonts back that meet the criteria: NotoSansHK, NotoSansSC, and NotoSansTC. For some reason, these NotoSans fonts were not included in the Google Fonts GitHub repo. So, you will have to download them manually if you need to use them.

We don’t want to keep editing the mask object each time we use a different set of filters, so we will make a list with all the filters we want to use and simply iterate over them. These strings are in fact regular expressions and you can try more advanced filtering on your own.

| regex_filters = ['_regular', '_japanese', '_serif'] | |

| df_new = pd.concat([df.filter(regex=regex, axis=1).sum(axis=1).astype(bool) for regex in regex_filters], axis=1) | |

| mask = df_new.all(axis=1) | |

| df.loc[mask] |
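As a hint of what more advanced filtering can look like, the sketch below compares a loose pattern with anchored ones. It relies on `DataFrame.filter` applying the regular expression as a search over the column names; the toy columns are hypothetical.

```python
import pandas as pd

# Toy annotation columns (hypothetical)
toy = pd.DataFrame({
    'family': ['Alpha', 'Beta'],
    'category_serif': [1, 0],
    'category_sans-serif': [0, 1],
    'variants_bold': [1, 1],
    'variants_bolditalic': [0, 1],
})

# a loose pattern matches every column that contains the substring
loose = list(toy.filter(regex='serif', axis=1).columns)

# anchoring with ^ and $ pins the match to one exact column name
strict = list(toy.filter(regex=r'^category_serif$', axis=1).columns)

# an optional group selects bold with or without italic, and nothing else
bold_any = list(toy.filter(regex=r'^variants_bold(italic)?$', axis=1).columns)

print(loose)
print(strict)
print(bold_any)
```

This is why the filter list above uses '_serif' rather than 'serif': the leading underscore keeps the sans-serif column out of the match.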

How about we make this into a reusable function? It takes a DataFrame and a list of regex filters as inputs and returns a list of font names. The patterns are compiled with re.IGNORECASE, so the filters are matched regardless of case.

| import re | |

| def filter_fonts(df, regex_filters): | |

|     df_new = pd.concat([df.filter(regex=re.compile(regex, re.IGNORECASE), axis=1).sum(axis=1).astype(bool) for regex in regex_filters], axis=1) | |

|     mask = df_new.all(axis=1) | |

|     return list(df.loc[mask].family) | |

| filtered_fontnames = filter_fonts(df, ['Black', 'cyrillic', '_serif']) | |

| filtered_fontnames |

Some Caveats

Before we move on, I want to point out some issues that I found. We are relying on the font data from the CSV annotations that we generated from the Google Fonts Developer API, but there are some discrepancies.

- First, the number of fonts present in the annotations is not the same as the number of font files we downloaded.

- Some fonts that are not indicated as latin still have latin alphabets.

- NotoSansTC is present in the annotation but is not included in the zip archive. Probably because some languages such as Chinese or Korean have a lot of characters and font files get much bigger compared to latin fonts. It’s just my guess…

- A font called Ballet — the font file exists but it’s not in the annotation. There may be a few other cases like this.

- The name of the folder containing font files is mostly the same as the name of the fonts themselves but there are some exceptions. For example, a font called SignikaNegative is inside a folder called signika.

- A font called BlackHanSans is obviously a very thick black weight, but it is listed as regular weight.

So, the annotation is not the complete representation of the font files we downloaded. As a whole, I think it is still a very valuable resource that we have.
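If you want to check these discrepancies yourself, one rough way is to compare the family names in the annotation with the folder names on disk. The sketch below does this with plain sets. `compare_families` is a hypothetical helper, and the sample inputs are illustrative rather than the real listing.

```python
def compare_families(annotated, folders):
    """Compare annotation family names with folder names, ignoring case."""
    annotated_keys = {name.lower() for name in annotated}
    folder_keys = {name.lower() for name in folders}
    return {
        # listed in the annotation, but no folder with that name
        'missing_on_disk': sorted(annotated_keys - folder_keys),
        # folder exists, but no family with that name in the annotation
        'missing_in_annotation': sorted(folder_keys - annotated_keys),
    }

# illustrative inputs based on the cases mentioned above
report = compare_families(
    annotated=['NotoSansTC', 'SignikaNegative', 'Lato'],
    folders=['lato', 'signika', 'ballet'],
)
print(report)
```

With the real data, you could pass `df.family` and the result of `os.listdir` on the ofl directory. Cases like SignikaNegative will show up on both sides because the folder is named differently from the font, so treat the report as a list of candidates to inspect by hand.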

Load Font File Path

We will use glob to collect the font file paths. If you only want to filter fonts by weights such as Regular, Bold, or Thin, simple file name checking will be enough, as the weights/styles are already part of the file names. However, you won't be able to use the other annotations we looked at, such as language subsets or category.

| import glob | |

| # change the root directory according to your setup | |

| ROOT = '../../input/fonts/ofl' | |

| # some fonts are within /ofl/fontname/static directory (2 levels deep) | |

| all_fonts_path = glob.glob(ROOT + '/**/**/*.ttf', recursive=True) | |

| print('number of font files in total: ', len(all_fonts_path)) |

number of font files in total: 8708

Filter Fonts
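If weight or style is your only criterion, the file names alone are enough, as mentioned earlier. Here is a minimal sketch, assuming the static fonts follow the Family-Style.ttf naming pattern. `filter_by_style` is a hypothetical helper, and the sample paths are made up.

```python
from pathlib import Path

def filter_by_style(paths, style):
    """Keep paths whose file name ends with -<style>.ttf, ignoring case."""
    wanted = style.lower()
    selected = []
    for p in paths:
        stem = Path(p).stem  # e.g. 'Lato-Bold'
        # split on the last hyphen; sep is '' when there is no hyphen
        _, sep, suffix = stem.rpartition('-')
        if sep and suffix.lower() == wanted:
            selected.append(p)
    return selected

# made-up paths that mimic the layout of the ofl directory
sample_paths = [
    'ofl/lato/Lato-Bold.ttf',
    'ofl/lato/Lato-BoldItalic.ttf',
    'ofl/lato/Lato-Regular.ttf',
    'ofl/crimsonpro/CrimsonPro[wght].ttf',
]
bold_paths = filter_by_style(sample_paths, 'bold')
print(bold_paths)
```

Because the comparison is done in Python rather than by the file system, it behaves the same on case-sensitive and case-insensitive systems. Files without a hyphenated style suffix, such as the variable font in the sample, are skipped.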

It is time to bring different pieces together into a single function that will take a DataFrame object and the path to font files, and then use the filters we define to return a list of filtered font paths.

| def filter_fonts_get_paths(df, root='./', variants=['_'], subsets=['_'], category=''): | |

|     # treat empty filters as "match everything" | |

|     if not variants or variants == [''] or variants == '': variants = ['_'] | |

|     if not subsets or subsets == [''] or subsets == '': subsets = ['_'] | |

|     # apply filters | |

|     regex_filters = variants + subsets + ['_'+category] | |

|     df_new = pd.concat([df.filter(regex=re.compile(regex, re.IGNORECASE), axis=1).sum(axis=1).astype(bool) for regex in regex_filters], axis=1) | |

|     mask = df_new.all(axis=1) | |

|     filtered_fontnames = list(df.loc[mask].family) | |

|     # construct file paths | |

|     paths = [] | |

|     for fontname in filtered_fontnames: | |

|         if variants == ['_']: # select all variants | |

|             sel = glob.glob(f'{root}/{fontname.lower()}/**/*.ttf', recursive=True) | |

|             paths.extend(sel) | |

|         else: | |

|             for variant in variants: | |

|                 sel = glob.glob(f'{root}/{fontname.lower()}/**/{fontname}-{variant}.ttf', recursive=True) | |

|                 paths.extend(sel) | |

|     print(f'Found {len(paths)} font files.') | |

|     return paths | |

| # let's try the function | |

| paths = filter_fonts_get_paths(df, root=ROOT, subsets=['latin'], variants=['thinitalic'], category='sans-serif') |

To describe what the function is doing: for each name in filtered_fontnames, it builds the corresponding file path pattern and checks whether matching files exist. If they do, their paths are added to the list that the function returns.

From the font names, we can retrieve the file names so that we can load each font and later generate images. We filter fonts with weights, styles, subsets, and categories, but the file names only contain font names and weights/styles.

A few things to note here:

- Depending on your OS, you may need to give case-sensitive filters. For example, Bold, LightItalic may work but bold, lightitalic may not. I am using a Mac, and its file system is case-insensitive.

- You also cannot combine mutually exclusive conditions, such as filtering sans-serif and serif at the same time. You can, however, input a regular expression to create a more complex filter.

- Some variable fonts are not selected (e.g. CrimsonPro, HeptaSlab) because they follow different naming conventions than the others. I just ignored them because there are not that many, and the variable fonts that have static versions are searchable through the filters.
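To illustrate the point about mutually exclusive conditions, here is a small sketch on toy data. It mirrors the AND logic of the function above, but `families_matching` is a hypothetical helper and the families are made up. It uses the inline `(?i)` flag for case-insensitive matching instead of a compiled pattern.

```python
import pandas as pd

# Toy annotation: each family belongs to exactly one category (hypothetical)
toy = pd.DataFrame({
    'family': ['Alpha', 'Beta', 'Gamma'],
    'category_serif': [1, 0, 0],
    'category_sans-serif': [0, 1, 0],
    'category_display': [0, 0, 1],
})

def families_matching(frame, patterns):
    """AND together one boolean column per regex pattern."""
    flags = [
        frame.filter(regex=f'(?i){p}', axis=1).sum(axis=1).astype(bool)
        for p in patterns
    ]
    mask = pd.concat(flags, axis=1).all(axis=1)
    return list(frame.loc[mask, 'family'])

# two separate filters are combined with AND, so nothing can match both
both = families_matching(toy, ['_serif', '_sans-serif'])

# a single pattern with an optional group acts like an OR across columns
either = families_matching(toy, ['_(sans-)?serif$'])

print(both)
print(either)
```

The first call returns an empty list because no family is both serif and sans-serif, while the single regular expression selects families from either category.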

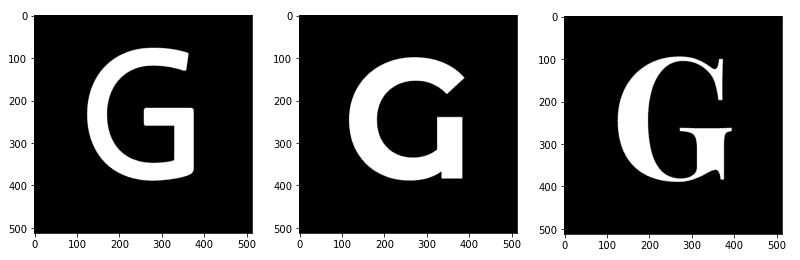

Preview Font Images

It is finally time to see the fonts displayed as images.

| import random | |

| IMG_HEIGHT = 512 | |

| IMG_WIDTH = 512 | |

| paths = filter_fonts_get_paths(df, root=ROOT, subsets=[''], variants=['bold'], category='') | |

| r = random.randrange(0, len(paths)) | |

| # sample text and font | |

| text = "G" | |

| text_size = 400 | |

| x = IMG_WIDTH/2 | |

| y = IMG_HEIGHT*3/4 | |

| font = ImageFont.truetype(paths[r], text_size) | |

| # get text info (not being used but may be useful) | |

| # font.getsize() was removed in Pillow 10, so derive the size from the bounding box | |

| left, top, right, bottom = font.getbbox(text) | |

| text_width, text_height = right - left, bottom - top | |

| print('text w & h: ', text_width, text_height) | |

| print(left, top, right, bottom) | |

| # create a blank black canvas | |

| canvas = Image.new('RGB', (IMG_WIDTH, IMG_HEIGHT), "black") | |

| # draw the text onto the canvas | |

| draw = ImageDraw.Draw(canvas) | |

| draw.text((x, y), text, 'white', font, anchor='ms') | |

| plt.imshow(canvas) |
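The preview above anchors the glyph at its baseline ('ms') three quarters of the way down the canvas. If you would rather center the visible ink of each glyph, the bounding box values can be used for that. This is only a sketch of the arithmetic: `centered_origin` is a hypothetical helper, and the sample bounding box is made up rather than measured from a real font.

```python
def centered_origin(bbox, canvas_size):
    """Return the (x, y) drawing origin that centers the ink box on the canvas.

    bbox is (left, top, right, bottom) relative to the drawing origin,
    in the form returned by font.getbbox(text) with the default anchor.
    """
    left, top, right, bottom = bbox
    canvas_w, canvas_h = canvas_size
    # equal margins on both sides, then shift back by the bbox offset
    x = (canvas_w - (right - left)) / 2 - left
    y = (canvas_h - (bottom - top)) / 2 - top
    return x, y

# made-up bounding box for a large glyph on a 512x512 canvas
origin = centered_origin((20, 90, 300, 390), (512, 512))
print(origin)
```

In the notebook, you could pass the result to draw.text as the position with the default anchor, for example `draw.text(centered_origin(font.getbbox(text), (IMG_WIDTH, IMG_HEIGHT)), text, 'white', font)`. Whether centering the ink or aligning the baselines works better depends on what you want the model to learn.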

Everything Pieced Together

Here is the code block that pieces everything together. This time, all the images will be saved as PNG files.

| from IPython.display import clear_output | |

| # display images | |

| monitor = False | |

| # font filtering | |

| df = pd.read_csv('./google-fonts-annotaion.csv') | |

| paths = filter_fonts_get_paths(df, root=ROOT, subsets=['latin'], variants=['bold'], category='serif') | |

| # text setup | |

| text = "G" | |

| x = IMG_WIDTH/2 | |

| y = IMG_HEIGHT*3/4 | |

| text_size = 400 | |

| # create save directory | |

| target_dir = f'./fonts-images/{text}' | |

| !mkdir -p $target_dir | |

| # drawing setup | |

| canvas = Image.new('RGB', (IMG_WIDTH, IMG_HEIGHT), "black") | |

| draw = ImageDraw.Draw(canvas) | |

| for i, path in enumerate(paths): | |

|     font = ImageFont.truetype(path, text_size) | |

|     # clear the canvas before drawing the next glyph | |

|     draw.rectangle((0,0,IMG_WIDTH,IMG_HEIGHT),fill='black') | |

|     draw.text((x, y), text, 'white', font, anchor='ms') | |

|     canvas.save(f'{target_dir}/{i:05}.png', "PNG") | |

|     if monitor: | |

|         clear_output(wait=True) | |

|         plt.imshow(canvas) | |

|         plt.show() | |

|     if i % 200 == 0: | |

|         print(f'exporting {i:05}th of {len(paths)}') | |

| print('Exporting completed.') |

Conclusion

Now, you can use the generated images as inputs to convolutional models such as Generative Adversarial Networks or Autoencoders. That is what I am going to be using the images for. I am sure you can find examples of the model architectures pretty easily on Medium and elsewhere.
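As a starting point, here is one way the saved PNG files might be loaded into a single NumPy array before they are fed to a model. This is a sketch under my own assumptions: `load_images` is a hypothetical helper, the 64x64 target size is arbitrary, and the demo writes two synthetic images to a temporary folder instead of reading real font images.

```python
import glob
import os
import tempfile

import numpy as np
from PIL import Image

def load_images(image_dir, size=(64, 64)):
    """Load every PNG in image_dir as a float32 array scaled to [0, 1]."""
    arrays = []
    for path in sorted(glob.glob(os.path.join(image_dir, '*.png'))):
        with Image.open(path) as img:
            # grayscale is enough for white glyphs on a black background
            gray = img.convert('L').resize(size)
            arrays.append(np.asarray(gray, dtype=np.float32) / 255.0)
    # resulting shape: (num_images, height, width, 1)
    return np.stack(arrays)[..., np.newaxis]

# demo with two synthetic images instead of real font renders
with tempfile.TemporaryDirectory() as demo_dir:
    Image.new('RGB', (512, 512), 'black').save(os.path.join(demo_dir, '00000.png'))
    Image.new('RGB', (512, 512), 'white').save(os.path.join(demo_dir, '00001.png'))
    batch = load_images(demo_dir)

print(batch.shape)
```

With the images generated in this post, you would point `image_dir` at the target directory used above, such as ./fonts-images/G. The trailing channel axis follows the channels-last layout; some frameworks expect channels first, so adjust as needed.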

I hope you found my post useful — please let me know if you make some fun stuff with the fonts!